The Future of AI: A Glimpse into Superintelligence

Hi all,

NeurIPS 2024 gave its Test of Time award to “Sequence to Sequence Learning with Neural Networks,” and Ilya Sutskever gave a great talk.

Sutskever began by looking back at a talk he gave 10 years prior, in 2014, where he presented the “Deep Learning Hypothesis.” The core idea was that a large neural network with 10 layers could perform any task a human could do in a fraction of a second. This was based on the assumption that artificial and biological neurons are similar and that real neurons are slow. While this hypothesis proved largely correct, the field was still in its early stages, and some of Sutskever’s predictions didn’t quite pan out.

One of the key ideas of deep learning is connectionism, which holds that a large artificial neural network can be configured to do many of the same things humans can. This has led to the age of pre-training, where very large neural networks are trained on huge datasets. Sutskever mentioned GPT-2, GPT-3, and scaling laws as examples of this progress. However, he believes that pre-training as we know it will eventually end: computing power keeps growing through better hardware, better algorithms, and larger clusters, but data is not increasing at the same pace. Not all researchers agree. Logan says “Pre-training is only over if you have no imagination”.

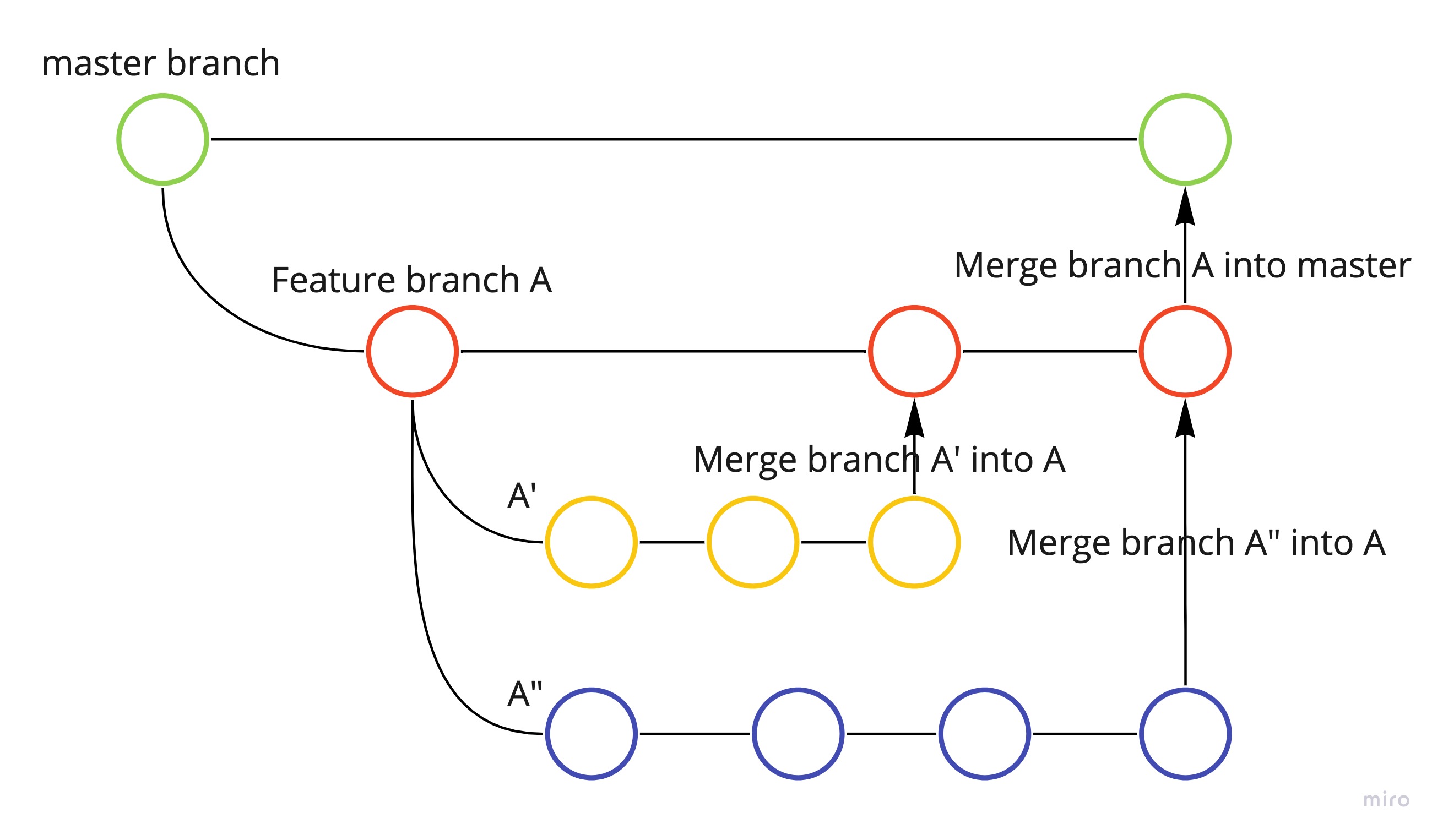

Sutskever then shifted his focus to the longer term, speculating about the future of superintelligence. He mentioned two potential developments: agents and synthetic data. Agents are AI systems that can act autonomously, while synthetic data is artificially generated data that can be used to train AI models. He sees both of these as promising areas for future research.

Finally, Sutskever touched on the concept of superintelligence. He believes that superintelligence will be qualitatively different from what we have today and that it will be characterized by the following properties:

- Agentic: Superintelligent systems will be able to act autonomously in the world. Recently Satya proclaimed on BG2 that “SaaS are CRUD databases with business logic. As AI Agents take over that logic, SaaS will collapse”. The agentic paradigm might not collapse SaaS entirely, but most of the wrapper startups will vanish.

- Reasons: They will be able to reason and understand things in a way that is far beyond current AI systems. Judging by OpenAI’s o3 and Google’s Gemini 2.0 Flash Thinking models, we are moving in that direction fast.

- Understands: They will be able to understand from limited data, without getting confused. Extrapolation is a limitation of traditional ML, so will new AI models overcome this barrier?

- Is self-aware: This could be a useful feature, as our sense of self is part of our own world models. Many models today adopt some kind of personality when asked probing questions about themselves. Are large models becoming more and more aware?

Sutskever acknowledges that it is impossible to predict exactly what superintelligence will look like. However, he believes that it will be a radical departure from current AI systems and that it will have incredible and amazing capabilities. He encourages people to reflect on these possibilities and to imagine what the future of AI might hold.

Thanks,

Ashish
