Why does context engineering matter?

Hi All,

We’ve long thought clever prompts were the secret sauce of AI and spent innumerable hours tunning them – until recently. Pioneers like Andrej Karpathy believe the magic isn’t just in crafting a witty question, but in supplying the AI with all the background it needs. As experts states context engineering is “the delicate art and science of filling the context window with just the right information for the next step”. In plain terms, it’s like giving an AI the entire blueprint (documents, data, tools, etc.) rather than a one-line instruction.

What is Context Engineering?

Context engineering means preparing the AI’s workspace – loading its “desk” with everything it needs to solve a problem – instead of handing it a blank slate. In practice, an LLM’s context can include much more than the immediate query: system instructions, user prompts, chat history, retrieved documents, tool definitions, and even external memory. It’s about designing the entire information ecosystem for the model. As Philipp summarized it, context engineering is “designing and building dynamic systems that provide the right information and tools, in the right format, at the right time” for an AI agent. In other words, it’s not just writing a clever prompt – it’s making sure the AI has the supporting facts, examples, and data it needs to answer reliably.

Prompt vs. Context Engineering: A Paradigm Shift

In classic prompt engineering, we focus on phrasing the question well. Context engineering is a bigger picture: it focuses on all the information around that question. Unlike a static prompt, context engineering is dynamic and multi-layered. It may involve retrieving fresh data at runtime, maintaining conversation memory, and orchestrating tool calls behind the scenes. In short, we’ve moved from “just give me a better prompt” to “build the whole stage on which the AI performs.” For example, Karpathy contrasts the two by noting that people often think of prompts as short task descriptions, but real LLM applications demand “task descriptions and explanations, few-shot examples, RAG, related (possibly multimodal) data, tools, state and history” – all carefully packed into the context window. Prompt engineering is like supplying a single clue; context engineering is like supplying the detective’s entire case file.

I liked the way Sebastian framed it below. When I am building a consumer-facing application, I want my end user to get the best results with minimal but relevant content. It is my responsibility as a builder to handle all the nuances (RAG, HyDE, grounding, etc.) to generate quality results.

Managing LLM Context Windows

LLMs are powerful but stateless – they only know what you feed them. In practice, an AI model only “knows” whatever information is in its context window at inference time. If you give it an empty or incomplete window, it simply guesses (often hallucinating). That’s why context is critical: with good context, the model has the facts and history it needs; without it, the model becomes like a genius locked in isolation. Modern LLMs have enormously large context windows (Gemini 2.5 Pro with 1m tokens), but they are still finite. I think “1m context window” is more of marketing highlight. The performance degrades after filing 10-20% of the context window. We must curate that space carefully. Strategies include summarizing or compressing earlier conversations, filtering out irrelevant details, and using external memory stores. These tactics turn a limited context window into a continuously useful knowledge base. By contrast, without such context engineering an LLM might forget earlier details or hallucinate about unknown facts.

Techniques and Tools for Context Engineering

Context engineering uses several key techniques to give LLMs real-world knowledge and persistence:

Retrieval & Tool Calls (RAG): Connect the LLM to search indexes, databases, or APIs. At runtime the system fetches relevant documents or computes answers (via tools like calculators) and injects these into the context. For example, an AI assistant might query a company’s document store for the latest policy and then include that text in the prompt so the model’s answer is grounded in up-to-date facts.

Memory & Long-Term State: Maintain ongoing context beyond one chat turn. Agents keep track of conversation history and user data; they summarize or store key points so the AI “remembers” them later. This way, a customer support bot remembers your device details across a session, or a research assistant carries over facts from one question to the next. The model’s context is continuously updated, not reset on each query.

Multi-Modal Context: Go beyond text. Modern systems can feed images, audio, or even sensor data into the AI. For instance, an app might use your phone’s camera or microphone, turning visual or auditory information into context that the LLM can understand. (Karpathy specifically notes that high-end context may include “possibly multimodal” data .) This grounds AI output in real-world inputs – an AI can answer not just from text prompts, but from pictures or live readings.

In practice, frameworks like LangChain and LlamaIndex have emerged to automate these patterns. They let developers plug in memory components and vector databases so that chains of prompts, tools, and data retrieval happen automatically. Using these libraries, engineers don’t have to build context pipelines from scratch – the tools handle caching, retrieval, and memory management under the hood.

Real-World Impact: Context-Engineered AI in Action

These ideas are not just theory – companies are already using them to build smarter assistants. For example, many enterprises now connect LLMs to their internal data via RAG. A finance firm might deploy a GPT-based chatbot that instantly searches up-to-date market reports and feeds them into its replies; a law firm might have an assistant that scans legal memos on demand and cites the correct statutes. Even major AI platforms support context engineering: OpenAI’s chat API includes a persistent system message (a basic form of context), and Azure’s Cognitive Search can be paired with GPT to search company documents and include the results in the prompt. In short, tools and cloud services are evolving to treat context not as an afterthought but as the core of AI performance

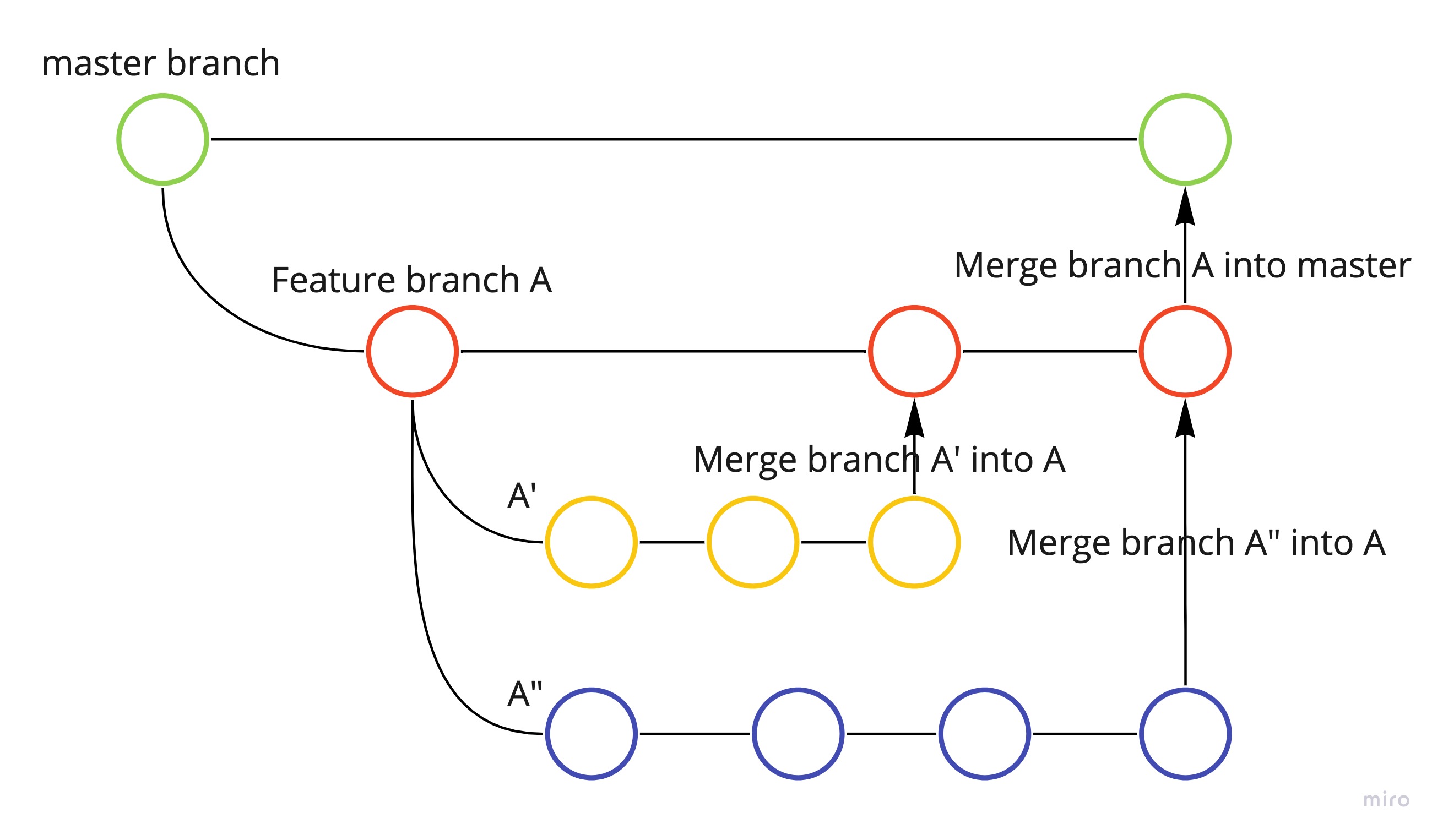

The Future of Context-Aware AI

We’re just scratching the surface of what context-aware AI can do. Tomorrow’s systems may treat everything as context. Imagine an augmented-reality assistant that “sees” what you see through your camera, or a home AI that listens to background sounds – all that sensory data becomes part of the context. Emerging standards point in this direction. With MCP, an AI could automatically fetch real-time info or activate external services, seamlessly enriching its context with live data. Ultimately, context engineering is paving the way to AI that perceives the world rather than just parroting text. By engineering context windows to include long documents, databases, multiple languages or media, and live inputs, we unlock capabilities far beyond any one prompt. The result is an AI assistant that can explain, reason, and even act with a depth of understanding on par with a well-informed human – or better. The future is one where context-aware agents constantly integrate new information, build on past interactions, and bridge the gap between AI and the real, dynamic environment we live in. In short, context engineering shifts our focus from “what single instruction do I give the model?” to “what entire knowledge ecosystem do I create for it?” Those who master this discipline – curating data sources, managing context windows, and integrating tools – will build AI systems that are far smarter, more reliable, and more adaptable than what prompts alone could achieve.

09/29/2025- Anthropic released a blog elaborating on the context enginnering for AI agents.

The latest research on Agentic Context Engineering (ACE) proposes evolving LLM contexts as adaptive playbooks—not just static prompts. Instead of losing valuable insights to brevity, ACE’s modular, incremental updates ensure detailed knowledge persists and improves over time. By mimicking human learning through autonomous generation, reflection, and curation, this approach future-proofs LLM agents for self-improvement and enables practical, scalable deployment in specialized domains

Thanks, Ashish

Leave a comment