Is Model Context Protocol the future of AI development?

Hi All,

The Model Context Protocol is a standard proposed by Anthropic for connecting AI assistants to the systems where data lives, including content repositories, business tools, and development environments. MCP is a universal connector that enables Large Language Models (LLMs) to access real-time data and execute actions dynamically through standardized integrations.

MCP's popularity and adoption keep growing. Sam Altman recently announced that OpenAI will support MCP, which is great news for developers because it opens up more doors. Google has also announced MCP support.

How Does MCP Work (The Basics)?

At its core, MCP operates on a client-server architecture, a fundamental computing model where tasks are divided between service requesters (clients) and service providers (servers). This interaction can be easily understood through the analogy of ordering a pizza. In this scenario, the individual placing the order acts as the client, initiating a request for a pizza. The pizza store, which receives the order, prepares it, and delivers it, functions as the server, providing the requested service. Similarly, in MCP, an AI application needing external resources acts as the client, communicating with an MCP server that provides access to specific data or tools.

MCP Host/Client: This is essentially an AI application that requires external data or specific functionality to enhance its capabilities. Beyond the initial examples of Integrated Development Environments (IDEs), chatbots like Claude Desktop, and custom workflow tools, many real-world AI applications need external data.

MCP Server: This is a lightweight program that exposes specific data sources or tools through the standardized MCP interface, which allows AI clients to interact with a wide array of resources. Examples include Customer Relationship Management (CRM) systems like Salesforce and HubSpot; cloud storage services such as Google Drive, Dropbox, and AWS S3; SQL and NoSQL databases; local or network file systems; and external APIs such as GitHub, Slack, payment gateways like Stripe and PayPal, weather APIs, and the Google Shopping API. MCP servers can also expose specific functionality within software applications, such as document processing, code execution environments, or data analysis tools. The same flexibility allows for internal, company-specific servers that give restricted access to sensitive data and tools, which helps with security.

The Protocol: This defines the standardized communication rules that govern the interaction between MCP clients and servers, with messages exchanged as JSON-RPC 2.0. The rules cover how an AI client discovers the data sources and tools exposed by an MCP server, and the standard format for requesting data from the server and instructing it to execute actions with the available tools. MCP also includes mechanisms for managing security, so that data is accessed and used securely. Finally, the protocol supports more than one transport for communication, such as standard input/output (stdio) for local servers and HTTP with Server-Sent Events (SSE) for remote ones.
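As a rough illustration of the discovery and request formats described above, the MCP specification defines a `tools/list` method for discovery and a `tools/call` method for invoking a tool, both carried as JSON-RPC 2.0 messages. The sketch below only builds the request payloads a client might send. The helper `make_request`, the tool name `summarize_folder`, and its arguments are hypothetical and chosen purely for illustration.

```python
import json
from itertools import count

_ids = count(1)  # JSON-RPC request ids; any unique value works


def make_request(method, params=None):
    """Build a JSON-RPC 2.0 request dict of the kind an MCP client sends."""
    request = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        request["params"] = params
    return request


# Discovery: ask the server which tools it exposes.
list_tools = make_request("tools/list")

# Invocation: call one tool by name with arguments.
# "summarize_folder" and its arguments are hypothetical examples.
call_tool = make_request(
    "tools/call",
    {"name": "summarize_folder", "arguments": {"folder": "HCP"}},
)

if __name__ == "__main__":
    print(json.dumps(list_tools, indent=2))
    print(json.dumps(call_tool, indent=2))
```

In a real setup these payloads would be written to the chosen transport by an MCP client library rather than assembled by hand.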

When the AI client needs information or needs to act (e.g., “Summarize the latest changes in the ‘HCP’ folder in a Google Cloud Storage bucket”), it communicates with the relevant MCP server using the protocol. The server then interacts with the actual data source or tool and sends the necessary information back to the AI client.
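The round trip described above can be sketched in-process. This is a toy under stated assumptions: `ToyStorageServer`, the `list_changes` tool, and the in-memory `FAKE_BUCKET` dict are all hypothetical stand-ins, and the code is not the official MCP SDK. It skips transports, initialization, and authentication, and only loosely follows MCP's JSON-RPC response shapes.

```python
import json

# Stand-in for a real data source (hypothetical file names and notes).
FAKE_BUCKET = {
    "HCP/report.md": "Added Q3 figures",
    "HCP/notes.txt": "Fixed typos",
    "other/readme.md": "Initial commit",
}


class ToyStorageServer:
    """Toy server: maps protocol requests onto the underlying data source."""

    def handle(self, request):
        method = request["method"]
        if method == "tools/list":
            result = {"tools": [{"name": "list_changes",
                                 "description": "List changes in a folder"}]}
        elif method == "tools/call" and request["params"]["name"] == "list_changes":
            folder = request["params"]["arguments"]["folder"]
            lines = [f"{path}: {note}"
                     for path, note in sorted(FAKE_BUCKET.items())
                     if path.startswith(folder + "/")]
            result = {"content": [{"type": "text", "text": "\n".join(lines)}]}
        else:
            # Unknown method or tool: reply with a JSON-RPC error
            # (-32601 is the standard "method not found" code).
            return {"jsonrpc": "2.0", "id": request["id"],
                    "error": {"code": -32601, "message": "Method not found"}}
        return {"jsonrpc": "2.0", "id": request["id"], "result": result}


def client_call(server, tool, arguments, request_id=1):
    """Client side: send a tools/call request and return the text reply."""
    request = {"jsonrpc": "2.0", "id": request_id, "method": "tools/call",
               "params": {"name": tool, "arguments": arguments}}
    # Serialize in both directions to mimic messages crossing a transport.
    wire_request = json.loads(json.dumps(request))
    response = json.loads(json.dumps(server.handle(wire_request)))
    return response["result"]["content"][0]["text"]


if __name__ == "__main__":
    print(client_call(ToyStorageServer(), "list_changes", {"folder": "HCP"}))
```

The AI application would then pass the returned text to the model as context for the summary; the server never needs to know which model is on the other end.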

Why Does MCP Matter? Key Benefits:

MCP offers significant advantages for developers, users, and the AI ecosystem as a whole:

Standardization & Simplified Integration: By establishing a unified protocol, MCP drastically reduces the complexity of connecting AI models to external systems, turning the “M × N integration problem” into a more manageable “M + N” one. Developers can build a single MCP server for a particular tool or data source, and multiple AI clients can then use it without a custom integration for each client. Furthermore, department- or company-specific servers with restricted access provide a robust mechanism for managing data security and governance.
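To make the “M × N” versus “M + N” difference concrete, here is a small arithmetic sketch. The function names and the sample counts are illustrative assumptions, and it treats every model-tool pairing as one integration of equal cost, which real projects will not match exactly.

```python
def custom_integrations(models, tools):
    """Point-to-point: every model needs its own connector to every tool."""
    return models * tools


def mcp_integrations(models, tools):
    """Shared protocol: one client per model plus one server per tool."""
    return models + tools


if __name__ == "__main__":
    for m, n in [(2, 3), (5, 20), (10, 100)]:
        print(f"M={m}, N={n}: custom={custom_integrations(m, n)}, "
              f"mcp={mcp_integrations(m, n)}")
```

With 5 models and 20 tools, that is 100 bespoke connectors against 25 protocol implementations, and the gap widens as either number grows.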

Flexibility & Interoperability: The protocol allows for easy switching between different LLM providers or AI clients without the need to rebuild integrations from scratch. This fosters a richer ecosystem of compatible tools and applications, promoting innovation and providing users with greater choice. MCP’s model-agnostic nature ensures that it can be used across various AI models, including proprietary and open-source options.

Enhanced AI Capabilities: By enabling access to real-time, relevant data and the ability to perform actions through exposed tools, AI can generate more accurate, practical, and context-aware responses and workflows. For instance, AI agents can perform multi-step tasks across different systems, understand their environment through IoT device integration, collaborate with other AI agents, and provide deeply integrated personal assistance. The ability to access real-time data also opens up possibilities for applications like financial modeling and personalized e-commerce recommendations.

Improved Security: The protocol encourages best practices for securely exposing data and managing access, often keeping sensitive information within an organization’s infrastructure. MCP deployments can layer in security features such as connection isolation, granular permissions, data masking, encrypted transport, and centralized logging. An MCP server can also function as a secure API gateway for AI, addressing concerns about unmonitored access and potential data exfiltration. Security and governance layers built on the protocol, such as MCP Guardian, further strengthen the security posture of AI systems.

Better Developer Experience: MCP leads to a better developer experience by simplifying the process of building sophisticated AI agents and workflows. The reduction in custom coding requirements, the availability of pre-built servers, and the standardized interface and communication protocol make it easier for developers to integrate AI with various data sources and tools.

Scalability: MCP supports scalability by accommodating various communication methods and making it easy to add new tools and data sources as needed. Its modular design allows AI models and tool integrations to scale independently, so AI applications can adapt and grow over time.

The Pre-MCP Era: Navigating the Complexities of AI Integration

Before the advent of standardized protocols like MCP, integrating AI with external systems presented significant challenges and complexities. Developers often had to write custom code or rely on specialized plugins for each individual data source or API they wanted to connect to an AI model (we did it for our project). This resulted in intricate and often fragile systems, where managing and maintaining integrations became a substantial burden. The “M × N integration problem” illustrates this difficulty: the effort required to connect M different AI models to N different external tools grows with the product of the two, because every model needs its own connector to every tool. That creates a significant barrier to widespread AI adoption and utility.

Furthermore, organizations often struggled with data silos, where crucial data resided in isolated systems and applications across different departments, making it incredibly challenging to access and unify information needed for comprehensive AI analysis and action. Data incompatibility posed another major hurdle, as data from various sources often came in diverse formats, structures, and with inconsistent naming conventions, requiring significant effort for mapping, transformation, and harmonization before AI models could effectively use it. The lack of interoperability between different systems further compounded these issues.

The pre-MCP era was also characterized by increased engineering complexity and system overhead. Maintaining context across numerous custom integrations was difficult, and ensuring consistent security and governance across these disparate connections was a significant concern. Together, these challenges hindered the seamless integration of AI with the real world and limited the potential of AI applications to deliver truly impactful solutions.

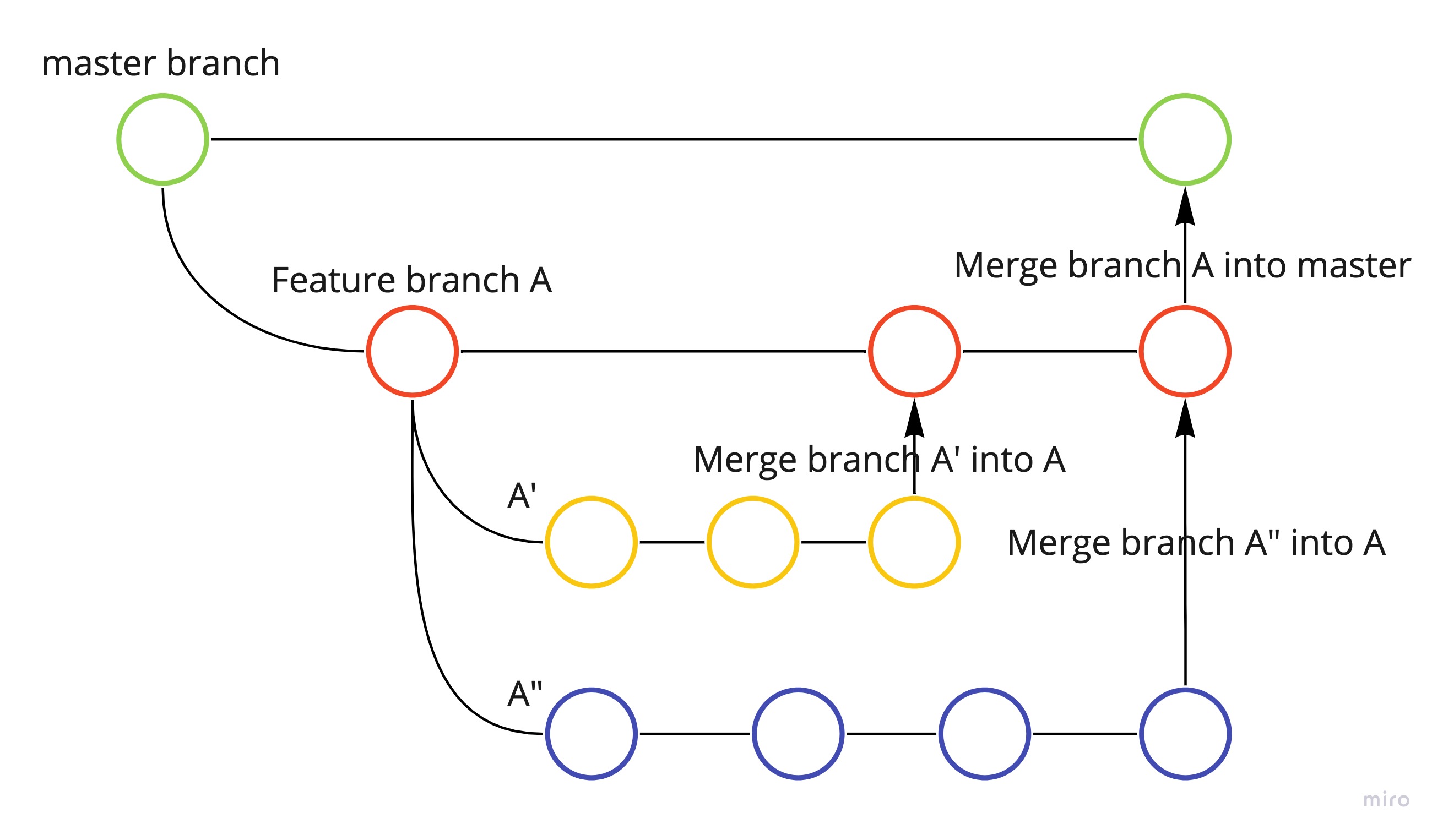

Looking Ahead: The Future of MCP and AI Integration

The future of MCP and AI integration holds significant promise, particularly in the areas of authentication, authorization, and client experience.

In terms of authentication, future trends point towards more sophisticated and adaptive mechanisms within the context of AI and data integration. As MCP facilitates access to a wider range of sensitive data, robust authentication will be crucial to protect against unauthorized access. Emerging trends include the use of AI-powered fraud detection to identify increasingly sophisticated threats like deepfakes and synthetic identities. Adaptive authentication, leveraging AI and behavioral analytics, will likely become more prevalent, dynamically adjusting access controls based on real-time risk assessments. The move towards passwordless authentication methods, such as passkeys and biometrics, will continue to gain traction, offering enhanced security and user convenience. Continuous authentication, utilizing behavioral biometrics and AI to verify a user’s identity throughout their session, will also significantly bolster security.

The future of authorization in AI will focus on more dynamic and context-aware models, and standardized protocols like MCP are poised to play a vital role in enabling secure data access and fine-grained management of permissions. Trends like attribute-based access control (ABAC), where access is granted based on specific user and resource attributes, are expected to become more widespread. AI-driven authorization systems will continuously evaluate context, user intent, and potential risk profiles to make intelligent access decisions. Ongoing standardization efforts in authorization protocols, such as AuthZEN, will contribute to a more unified and interoperable security landscape. Integrating authorization mechanisms directly within AI pipelines, as facilitated by protocols like MCP, will allow for real-time enforcement of security policies. MCP’s planned centralized authorization features will further streamline the permissions management for AI interactions with external systems.

Improving the client experience when AI interacts with external systems through standardized protocols like MCP is paramount for widespread adoption and user satisfaction. Personalization will be a key focus, with AI leveraging data and preferences accessed via MCP to provide tailored recommendations and customized experiences. Efficiency will be enhanced as AI agents utilize MCP to automate complex tasks and streamline user workflows, reducing manual effort. The seamless integration provided by MCP will contribute to a more unified and intuitive user experience across various AI applications and services. AI-powered chatbots, utilizing MCP to access comprehensive knowledge bases, will offer more accurate and real-time customer support. Data standardization, facilitated by AI and protocols like MCP, will ensure data consistency, leading to more reliable and accurate AI interactions. Furthermore, AI’s ability to anticipate user needs based on data accessed through MCP will enable proactive assistance, further enhancing the overall user experience.

Thank you!!
