Design Thinking in the Machine Learning Lifecycle

Hi All,

Design thinking is a problem-solving approach that focuses on creating innovative solutions that meet the needs and desires of users. When applied to machine learning problems, it involves identifying the key challenges and opportunities associated with a problem, generating creative ideas for potential solutions, and then prototyping and testing these solutions to refine and improve them.

Design thinking can be applied to the problem of making large language models (LLMs) more accessible to stakeholders in large companies. The approach differs depending on whether we are building solutions for internal stakeholders or designing products for external customers. Here are the stages involved in applying design thinking to an LLM problem:

Leader Group:

The LLM engagement project involves analyzing unstructured data and identifying potential risks. This will require a diverse team of leaders, including:

- Data scientists and machine learning experts

- Security and compliance experts

- Data governance experts

- Business leaders and/or consulting partners

- Full-Stack developers

Empathize:

The first stage of design thinking involves understanding the needs and challenges of stakeholders who work in large companies and may face barriers in accessing large language models. This could include:

- Employees from different departments or teams with varying levels of access controls

- Employees with different job roles or responsibilities

- Employees with different levels of technical expertise

- Security and compliance teams concerned about proprietary data leaking to LLM API service providers

The product team may need to conduct user research or engage with stakeholders to understand their respective needs and challenges.

Define:

Once the needs and challenges of stakeholders have been identified, the next step is to define the problem that needs to be solved. This could involve identifying the key barriers that prevent employees from accessing large language models, such as limited access to technology, lack of training or education, or lack of integration with existing systems or workflows.

Ideate:

The ideation stage involves generating creative ideas for potential solutions. This could include:

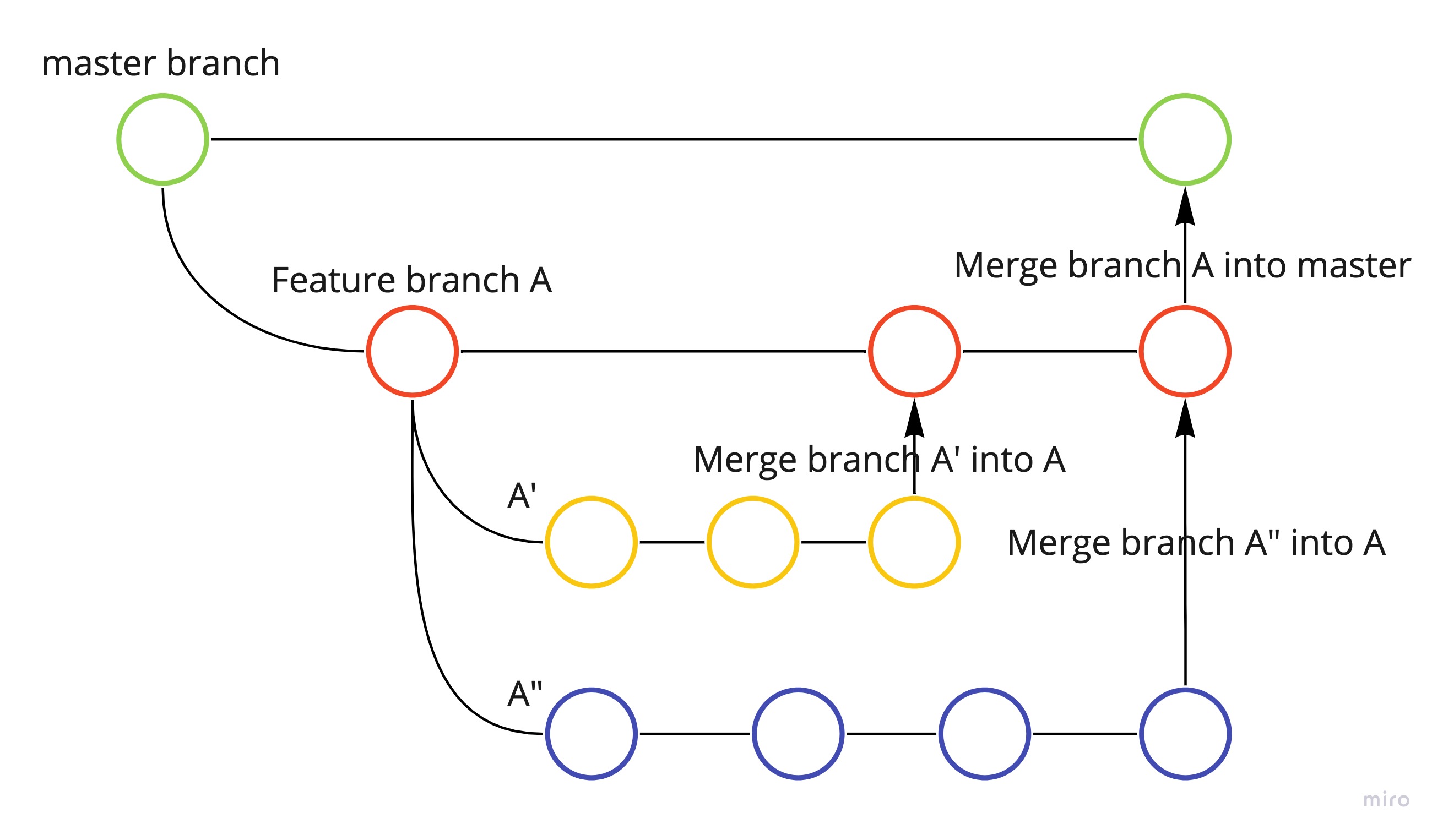

- Experimenting with open-source LLMs

- Developing new interfaces or integrations that make the language model more accessible to employees

- Creating new training programs or resources, as part of organizational change management (OCM), that help employees learn how to use the language model

- Developing new workflows or processes that integrate the language model into existing systems

Prototype:

Once potential solutions have been identified, the next step is to create low-fidelity prototypes that can be tested and refined. This could include:

- Building an MVP (minimum viable product) or MLP (minimum lovable product) using open-source, commercially usable LLMs

- Developing a new interface or integration that can be tested with employees

- Creating a pilot program to test the effectiveness of new training programs or workflows

- Understanding the diverse user groups within the company (their roles, skill levels, and specific requirements when interacting with LLMs) and creating user personas to represent these groups and their needs

Test:

The final stage of design thinking involves testing the prototypes with users to gather feedback and refine the design. This feedback can then be used to iterate further and improve the solution. Testing can be user-based or system-based, and the approach differs between traditional ML solutions and LLM-based solutions.

Addressing integration challenges:

Large language models may need to be integrated with existing systems or workflows to be useful to employees. The product team should consider how to address integration challenges, such as by developing APIs or integrating the language model into existing software platforms.
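
To make the idea of an internal API a little more concrete, here is a minimal sketch of a gateway that existing tools could call instead of talking to a model provider directly. Everything in it is an assumption for illustration: the `LLMGateway` class, the `allowed_roles` mapping, the `contract_review` use case, and the stub backend are hypothetical names, not part of any particular product.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Set

# Hypothetical backend signature: takes a prompt, returns the model's text.
Backend = Callable[[str], str]


@dataclass
class LLMGateway:
    """Single internal entry point that existing tools call."""
    backend: Backend
    # Hypothetical mapping of use case -> roles allowed to invoke it.
    allowed_roles: Dict[str, Set[str]] = field(default_factory=dict)

    def complete(self, role: str, use_case: str, prompt: str) -> str:
        # Reject the call if the caller's role is not cleared for this use case.
        if role not in self.allowed_roles.get(use_case, set()):
            raise PermissionError(f"role {role!r} may not use {use_case!r}")
        return self.backend(prompt)


def stub_backend(prompt: str) -> str:
    # Placeholder standing in for a real model call during prototyping.
    return f"[stub answer to: {prompt}]"


gateway = LLMGateway(
    backend=stub_backend,
    allowed_roles={"contract_review": {"legal", "compliance"}},
)
```

With this in place, a call such as `gateway.complete("legal", "contract_review", "Summarize clause 4")` goes through, while the same call with an uncleared role raises `PermissionError`. Putting access rules in one place like this is one possible way to reflect the varying access levels identified in the Empathize stage.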

Ensuring scalability:

Large companies have many employees who need access to the language models, so the development team must design for scalability and a high volume of users from the start. Following a modular approach from the MVP phase onward makes it easier to pivot the design later.
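
One way to picture the modular approach is to have the rest of the application depend on a small interface rather than on a specific model. The sketch below is only an illustration under that assumption: `LLMBackend`, `EchoBackend`, `UppercaseBackend`, and the `BACKENDS` registry are made-up names, and the two backends are trivial stand-ins rather than real models.

```python
from typing import Dict, Protocol


class LLMBackend(Protocol):
    """Interface the rest of the application depends on."""
    def generate(self, prompt: str) -> str: ...


class EchoBackend:
    """Stand-in used for the MVP; returns a canned response."""
    def generate(self, prompt: str) -> str:
        return f"echo: {prompt}"


class UppercaseBackend:
    """Second stand-in, representing a later swap to a different model."""
    def generate(self, prompt: str) -> str:
        return prompt.upper()


# Hypothetical registry; in practice this could be driven by configuration.
BACKENDS: Dict[str, LLMBackend] = {
    "mvp": EchoBackend(),
    "next": UppercaseBackend(),
}


def answer(prompt: str, backend_name: str = "mvp") -> str:
    # Callers only know the interface, so changing the model is a
    # configuration change rather than a rewrite.
    return BACKENDS[backend_name].generate(prompt)
```

Here `answer("hi")` uses the MVP backend and `answer("hi", "next")` uses the replacement, with no change to the calling code.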

Addressing security concerns:

Employees may unknowingly share sensitive and proprietary information with language models while prompting. Most LLM service providers offer an option to opt out of data collection, but the risk remains elevated. The security and compliance team should collaborate closely with the GenAI task force to ensure the solution is secure and compliant with relevant regulations and policies. Companies should also develop AI policies and provide compliance training for employees.
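
As one small, illustrative safeguard, prompts can be scrubbed of obviously sensitive strings before they leave the company network. The sketch below uses Python's standard `re` module; the patterns are assumptions for demonstration only (in particular, `PRJ-1234` is a hypothetical internal project ID format), and a real deployment would rely on the rules defined by the security and compliance team.

```python
import re

# Illustrative patterns only; real rules would come from company policy.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "PROJECT_CODE": re.compile(r"\bPRJ-\d{4}\b"),  # hypothetical internal ID format
}


def redact(prompt: str) -> str:
    """Replace each match with a bracketed label before the prompt is sent out."""
    for label, pattern in PATTERNS.items():
        prompt = pattern.sub(f"[{label}]", prompt)
    return prompt
```

For example, `redact("Email jane.doe@example.com about PRJ-1234")` returns `"Email [EMAIL] about [PROJECT_CODE]"`. Pattern matching like this only catches what it has been told to look for, so it complements policies and training rather than replacing them.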

Thank you!!
